Michelangelo Conserva

"Caminante, no hay camino: se hace camino al andar."

Ph.D. candidate at Queen Mary University of London, United Kingdom.

Professional Experience

Spatially-aware graph neural networks for multivariate time series forecasting with a learned graph structure

Research intern at Google Research, Kenya

Publications

Posterior Sampling for Deep Reinforcement Learning

R. Sasso, M. Conserva, and P. Rauber

ICML 2023

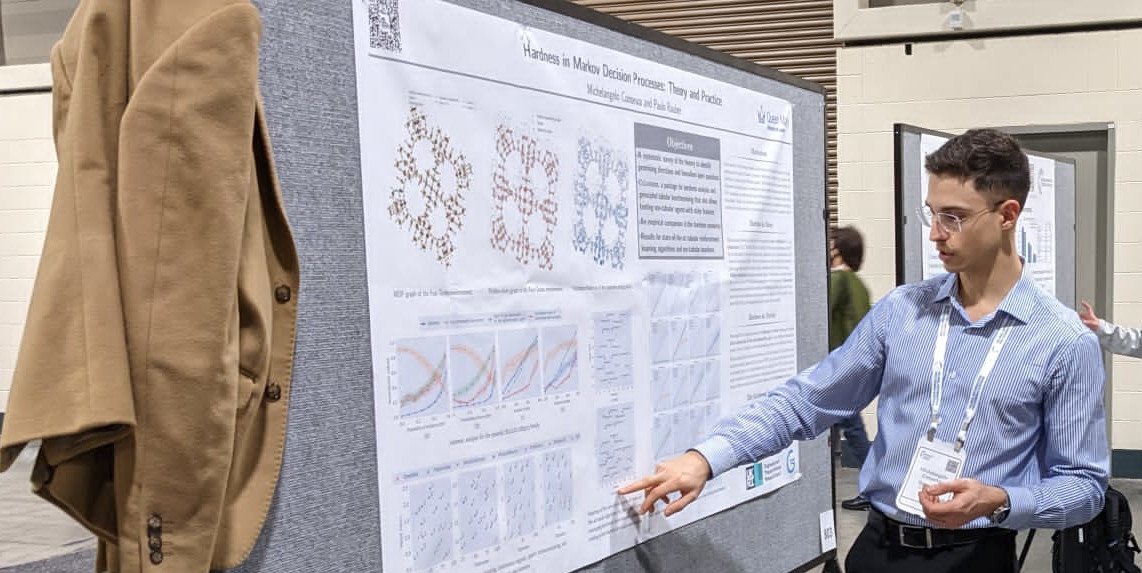

M. Conserva and P. Rauber

NeurIPS 2022

A. Ramesh, M. Conserva, P. Rauber, and J. Schmidhuber

Neural Computation 2022

M. Conserva, M. Deisenroth, and K. S. S. Kumar

Transactions on Machine Learning Research 2022

Education

Ph.D. in Computer Science, Queen Mary University of London, UK, 2020 - 2024.

MSc in Computational Statistics and Machine Learning, University College London, UK, 2019 - 2020, Distinction.

BSc in Statistics, Economics and Finance, Sapienza University of Rome, 2016 - 2019, 110 cum laude.